25 Jun 2023 - Day 1 of self synthetization

The project I'm working on right now deals with what happens when we all have a synthetic self (a concept reminiscent of an older project of mine, Smart You). How will our interpersonal relations change when we deploy our synthetic selves to relate with others?

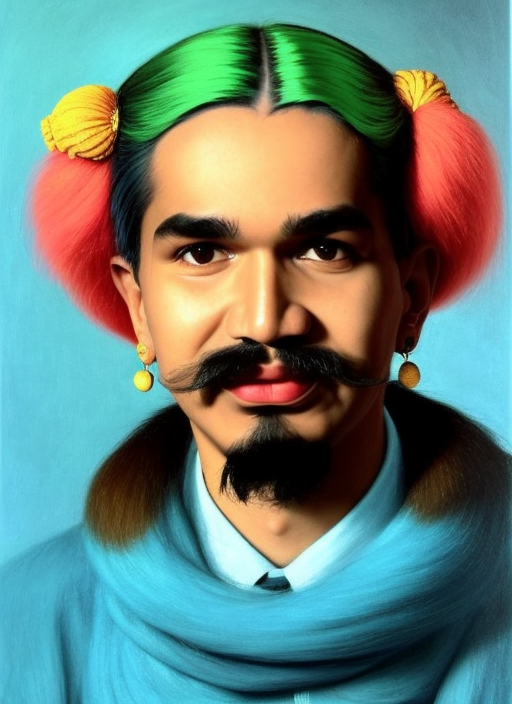

I thought to myself that the best way to deeply understand this dynamic was to synthesize myself. The images you see here were all generated with a fine-tuned Stable Diffusion model.

I used a dataset of roughly 100 cherry-picked photos of myself, taken over the last few years. While I will keep fine-tuning it with better parameters, having the ability to generate myself has already changed something in me.

I tried to play with gender, seeing how much I could warp it. As a non-binary person I felt I could explore ways of expressing myself that just weren't possible before. Having the ability to not only warp myself in time and space, but also in gender, ethnicity, and so much more, is a weird power to have...

...I played around with this last night for a bit too long. I have to admit that afterwards, when I went to the bathroom before going to sleep, there was a small moment when I saw myself in the mirror and got an uncanny feeling.

2 Jul 2023 - Day 8 of self synthetization

This tech is going to radically change our perception of the self, since boundaries that are not usually crossed can be crossed with just a few words. Not only can we change the setting we are in, we can change how we are portrayed and our identity. Gender fluidity is going to thrive in this, as it allows people to transition easily to any gender. But it does not stop there: you can also transition into any ethnicity, age, species, year, etc.

While open source will continue to exist, most people will use the generative models provided by big tech, probably Google, Meta, Microsoft, NVIDIA… These models, while accessible and free, can’t generate everything. The companies impose limitations that block generation in specific regions of latent space: the ones deemed inappropriate. If this holds true in the near future, will we be able to change gender with these closed models? Will we be able to change ethnicity, age…?

The synthetic friends we interact with will therefore be forcibly reduced versions that can’t produce inappropriate outputs. You can’t talk with your friend about conspiracy theories, NSFW or any other inappropriate topics, because they would react the way ChatGPT reacts when prompted with such themes.

5 Jul 2023 - Day 11 of self synthetization

Am I Synthiola?

Am I the set of patterns generated by a diffusion model? Or is it a predetermined fate?

How far does the blending between me and the representation mimicking me go?

7 Jul 2023 - Day 13 of self synthetization

But what am I experimenting with here? Is this my doom, self-exploration, or nothing really? Should I be playing with forces like this, whose effects on humans we don’t know? I do feel that it is mostly about being ahead of the market, in the sense that it won’t take long for companies to sell this synthetization process as a product: a way to create any kind of media of yourself. Like a video game where you enter text, voice, images and so on, and create any media you want featuring yourself.

Synthetic replica

Synthetic clone

Synthetic self

Smart you

AI you

AI U

It’s Always Synthiola in Philadelphia

iSynthiola

23 Jul - Day 29 of self synthetization

This is a crazy ride.

This last month I've been generating tons of images with my synthetic self, amounting to 1686 images as of now. Seeing my visual identity transition into a token I could insert and morph as I wanted, I'm starting to get a better sense of what dangers lie in such practice.

First of all, I realized that naming my synthetic self was key to perceiving it as an alter ego rather than my own self. It allowed me to see it as a reflection, an avatar of myself.

Overall, it showed me how much media fluidity it provides. If the internet already made media feel more fluid, since everyone had access to produce and share anything, now there are no boundaries. While before we couldn't easily manufacture a clip of ourselves in "It's Always Sunny in Philadelphia", now there are no skill-based boundaries stopping me from doing it in one evening.

I've been reduced to some image patterns and it definitely challenges my own individuality. It's not just my ability to see myself as a woman, as an old person, as a person of color, as both the cop and the protester. It also makes me question whether who I am is only someone with colored hair and a beard.

If this practice becomes normalized, the detachment from our real selves could be devastating. We will get used to seeing ourselves in any situation, any form, anything, making us numb to real experience, since it can be generated more easily.

5 Aug - Day 42 of self synthetization

16 Aug - Day 53 of self synthetization

Yesterday I fine-tuned a LoRA for SDXL on Synthiola, using Replicate.

Today I fine-tuned a .ckpt on upside-down Synthiola and on cat Synthiola. I find the upside-down one the most interesting. The cat fine-tune didn't quite catch that those are cat ears; they came out more like extrusions on the head… The upside-down one revealed how pattern recognition limits us in SD. We understand that the image is upside down, but the model just perceives pixels on an x-y grid. It is not programmed to check whether the image is upside down.

The results coming from upisdownn.ckpt are uncanny. They capture some visual properties of Fabian, but mostly make them extremely creepy. Brown hair rather than coloured (mixing up coloured hair and beards).

The act of turning it upside down, an extremely simple operation, messed up the model’s ability to learn it properly.

Meme idea: if we post everything upside down from now on, we can deflect AI training. That would mean humans enjoying content upside down.

25 Aug - Day 62 of self synthetization

Yesterday I looked up how those AI covers are made: RVC models. Today I trained one on my voice. Currently I’m rendering the Real Slim Shady video, where I used my voice as the rapper. This is new territory as I enter the synthetization of audio. My audio identity fooled some people. I sent a few songs of me singing to some friends, and some of them at first believed it was really me. When listening carefully, it is easy to spot artefacts, especially in the first one I tried, where the training data were voice messages from my phone.

But does Synthiola have my voice? Well, to best create a simulacrum of myself, using my own voice definitely makes it feel stronger.

28 Aug - Day 65 of self synthetization

What have I unleashed. By synthesising my voice, I can be anyone. Anyone can be me.

I’ve grown numb to images and video, but sometimes when I hear my synthetic voice I still get an uncanny feeling. When I heard myself singing Datemi un Martello in Italian, I felt like I had sung it myself. It’s like, I could have made this. Anyone can impersonate me while keeping their own identity. I can speak like X, but X can also speak like me.

I could theoretically speak like anyone I have some audio of. For example, prank calling someone to collect their voice data and then using it to extort them: telling them you can make them say anything. If they don’t comply, images too. And videos.

27 Sep - Day 95 of self synthetization

What are the effects of having shared many images of my synthetic self online?

If a person’s face is indexable, there is no privacy. Privacy faded away a long time ago, but this is on another level.

Now with Meta’s AI tools, anyone can build an app to make their own synthetic persona. What that persona will be able to generate, though, will be up to Meta. They will set the rules for what is allowed to be generated and what is prohibited from being synthesized.

Practice talking with a person through their avatar. Have your synth help you and your crush fall in love. Use synths as intermediaries between you and others.

It is difficult to socialise with people nowadays. Synths will help us relate to each other better.

We will build walls between people: translators that don’t communicate what we want to say with finesse. A broad assumption, generically translated.

My synthetic persona will be freer than the ones on Meta. While I’m also restricted, I can at least have a restriction-free model and do anything with it.

Meta’s interface will dictate the way we perceive other synthetic personas. Soulless NPCs living with us in our world.

30 Oct - Day 128 of self synthetization

Synthiola is showing me a part of me that I did not fully know. She represents a cartoon character, an icon, a recognisable pattern, a strong visual identity. While I was synthesising myself, the character became more real, while I was becoming more fake, more cartoonish, a simulacrum.

When I look at myself now, I cannot detach myself from Synthiola. I am the representation of Synthiola, while Synthiola is not a representation of me anymore. I do not see myself reflected in these representations; rather, the representation reflects me, if you know me. If you don’t know me, it represents just itself, Synthiola.

I become a repeatable pattern in a system. If someone possesses this pattern of me, I can’t do anything about it.

11 Nov - Day 140 of self synthetization

I’ve tainted my own media presence.

I created an oil spill of content representing me. This is affecting my media presence: where there used to be only the original version of me, I now have a much bigger generative presence. It is not me, but it is shaping how others perceive me and how I perceive myself. My media presence has an alternate timeline that can become anything I want it to.

Do I see myself differently in photos now? Yes, in some ways. When I look in a mirror and see Synthiola, do others also see me as Synthiola? Is my generative self changing who I am in real life through my media presence?

3 Dec - Day 162 of self synthetization

If Synthiola gets democratized, will I lose control over my identity? In some sense, yes. But unlike synths, I can change myself. I am not bound to a dataset.

Giving someone else the power to control something of yours. Like handing your Insta account to a specific person for one day.

Let someone else control your media presence.

I could give it to a specific person, who would then create a narrative not controlled by me.

22 Dec 2023 - Day 181 of self synthetization

Synthiola’s dilemma appeared on the Hard Fork podcast at 1:08:25, as a hard question about how Synthiola challenges Fabian’s identity.

The technology podcast with Kevin Roose and Casey Newton, featuring actress Jenny Slate as a special guest, discussed Synthiola’s potential for playing with identity and performing different personalities without losing oneself.

1 Jan 2024 - Day 191 of self synthetization

It’s 1:32, an hour and a half into 2024.

It’s weird to start 2024. Other years, I would not really perceive the new one; the passing of the year would become perceptible only after a few months, and it was hard to adapt to it. But this time I already feel in 2024. I kind of feel I know what will happen, and I can already feel that I’m living in 2024. I assume it’s because in the AI world it’s always about the future. It seems clear that t2v (text-to-video) will become much more prevalent, almost indistinguishable from real footage. A quarter of the world’s population will have elections, making them the first to be vulnerable to generative content.

Heck, I just took a photo at a photo booth at the New Year’s party with my friend, and some of the backgrounds we could choose from were generated. Not perfect MJ v6 images, more like v4/v5? Clearly generated images. My friend did not notice until I pointed out all the irregularities. Anyway, it was such an unexpected, amazingly good recap of all of 2023, which will be remembered as the year generative ML systems went mainstream. Or, in a way, the first year one could feel the impact they had on society, on people.

2022 was exhilarating if you were in the community, with DALL-E 2 breaking the first small piece of mainstream culture, even if that was small compared to 2023. MJ became much better and SD was born. 2021 was even more so, with the release of CLIP and the gradual transition to the birth of diffusion models. But 2023 was the year of the writers’ strike, all the lawsuits against these systems, the public debate about them stealing jobs and stealing copyrighted content. Everyone knew ChatGPT. That was the bastion that connected it all.

It really felt like all of big tech turned its attention to it, and it inadvertently created a space race for generative ML systems. Starting with fucking Microsoft implementing it in every small corner of their products: Bing, Microsoft drives, the Windows interface. You could not escape it. Man, so aggressive. Then Google, which was sorta challenged and humiliated, but by the end of the year arrived with Gemini, seemingly a multiplatform multimodal system, even though the really big model won’t be released until next January. Meta entered the space with Llama and Llama 2, and their stupid-ass synths of celebrities like MrBeast, Kendall Jenner or Snoop Dogg. Then every other digital platform, from Figma, Notion and Photoshop to any other small thing, had to have some kind of AI assistant, some copilot helping you do stuff. It was in the most obscure places, where you would just think: there’s no real need for this LLM in this interface. Just go away. Even DuckDuckGo had an AI copilot thingy for a bit.

Also sparkles. Sparkles everywhere. Every fucking thing had sparkles as the icon representing generative models. That’s apparently the symbol widely used for this specific rebranding of deep learning, as it feels like magic. What the symbol represents and entails is that the thing you’re using basically just does magic. It is so unbelievable that it is magic. I wonder if this will stick, or if these systems will stop seeming so absurd and we will move to iconography that better represents what they actually do.

I wonder if for me 2024 will be the year of Synthiola.

Synthiola and Synthprot in the lab

27 Apr - Day 308 of self synthetization

I feel the need to cut my beard off. I’ve been working with my own identity boiled down to a feminine person with a thick beard. That’s what Synthiola is. I’ve worked with this character for so long now, almost a year, and now I feel the need to cut my beard off.

Maybe it’s also just the moment, one where I feel I need to move on with life instead of staying stuck. A change in hair color usually made me feel as if it were a new chapter for me. The beard would be a more radical one, since I’ve worn it since 2016. Only occasionally did I cut the part underneath, leaving a moustache, strictly handlebar.

I don’t know what to expect when I do this. Beyond being attached to this character of Synthiola, I feel I will find something in me that I haven’t seen for a long time.

It is a radical act towards Synthiola, breaking the link (or at least weakening it). I think I will let it grow again afterwards, so what would that mean for my relation to Synthiola? Will Synthiola be her own character, distinct from me? A past version of me? Or a recurring character? Is Synthiola still me?

On some weird level, I’m waiting to cut it because I think it could hurt my upcoming film. My identity has somehow become the brand of my animated short, so I need to look like iola and Synthiola for people to kinda understand the reference. I use my mirror self to act in this film. If I don’t look like her, does the concept kinda get lost? Maybe I need to do a photoshoot before losing the beard, for a before-and-after. Like this, thinking of it as content.

Presenting Synthiola at the Attendee Stage program during Pictoplasma 2024, Berlin. Photo by Chiara Zilioli

7 May - Day 318 of self synthetization

I just shaved my beard.

The water on my skin is an experience I haven’t had for 8 years. It’s so weird to experience myself this way. Especially the touch at first. Then the smell. But of course, the appearance.

I’m another person. Synthiola is not me anymore (but I can pretend to be if I wear a beard mask).

As I assumed, I look much more feminine. If I don’t talk, I could be mistaken for a girl, or at least from far away or on a screen. Or at least it seems like I’m trying to be. The beard gave me some stability in being seen by others as balancing between masculinity and femininity.

I sometimes had this dream where I would cut off my beard and later regret it. More like a nightmare. When I’d wake up, I would feel relieved at the thought that I hadn’t done it. But this time, shaving it for real, I feel good. I liked the act and the result. I feel renewed instead of lost. I suppose I knew it was going to be nice, but the act of doing it was a difficult one, with no coming back. I guess for now it’s all good since no one has seen it, but the attention might get annoying in the coming days. But rubbing my hands over my chin is sooooo good.